Working Group is an LLM system designed to solve deep, real-world problems through human-in-the-loop, hierarchical agent coordination. It works by progressively reducing uncertainty about the problem and its requirements, and about how to construct an effective solution. The system is structured to incorporate outside information automatically as needed, and to systematically partition the cumulative work context so that each LLM call sees only what’s relevant at that moment and location. It achieves this partitioning by dynamically organizing work into a hierarchy of “pods” that maintain their own state, and by exploiting the division of labor inherent in each pod’s two-layer structure. Within each pod, a multi-participant deliberation and planning process forms the base layer, overseen by an introspective “cortex” layer that observes it and acts in response to detected patterns or conditions.

Working Group's core drive is to reduce uncertainty about how to construct a target artifact. As it runs, a work trace evolves, capturing the steps of this process; the trace then becomes the input to a model that generates the target artifact. Since the trace captures the progressive clarification of the objective and of the path to a solution, it holds exactly the information the model needs to construct the solution artifact.

The three main sources of uncertainty are:

- Goal uncertainty is reduced by asking questions of the goal-giver.
- World uncertainty is reduced by obtaining information from external systems, e.g., the web or the local file system.
- Solution uncertainty is reduced through a cycle of proposing and verifying candidate formulations: refining a formulation in response to small errors, and taking an alternate approach when the current one is estimated to be non-viable. Knowledge of prior or related work is incorporated via, e.g., web search.

The Working Group system consists of a supervised, dynamic pod hierarchy. Pods are stateful, isolated working units that can be spawned synchronously or asynchronously and that return artifacts as results. A pod is constructed with an objective describing the artifact it should construct, a round limit, and a set of agent configs (defining names, system prompts, tools, etc.).
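A minimal sketch of the inputs a pod is constructed with, assuming a plain Rust representation. The type and field names (AgentConfig, PodConfig, max_rounds) and the validate check are hypothetical illustrations, not Working Group's actual API.

```rust
// Hypothetical pod-construction types; field names are illustrative only.

/// Configuration for one agent in a pod's roster.
#[derive(Debug, Clone)]
struct AgentConfig {
    name: String,
    system_prompt: String,
    tools: Vec<String>, // tool identifiers, e.g. "web_search" (assumed naming)
}

/// What a pod is constructed with: objective, round limit, agent roster.
#[derive(Debug, Clone)]
struct PodConfig {
    objective: String, // describes the artifact the pod should construct
    max_rounds: u32,   // round limit
    agents: Vec<AgentConfig>,
}

#[derive(Debug, PartialEq)]
enum ConfigError {
    EmptyObjective,
    ZeroRounds,
    NoAgents,
}

impl PodConfig {
    /// Reject configs that could never produce an artifact (an assumed check).
    fn validate(&self) -> Result<(), ConfigError> {
        if self.objective.trim().is_empty() {
            return Err(ConfigError::EmptyObjective);
        }
        if self.max_rounds == 0 {
            return Err(ConfigError::ZeroRounds);
        }
        if self.agents.is_empty() {
            return Err(ConfigError::NoAgents);
        }
        Ok(())
    }
}

fn main() {
    let critic = AgentConfig {
        name: "critic".into(),
        system_prompt: "Find the weakest point in the latest proposal.".into(),
        tools: vec!["web_search".into()],
    };
    let config = PodConfig {
        objective: "A design doc for a rate limiter".into(),
        max_rounds: 6,
        agents: vec![critic],
    };
    match config.validate() {
        Ok(()) => println!("pod '{}' with {} agent(s) is valid", config.objective, config.agents.len()),
        Err(e) => println!("invalid config: {:?}", e),
    }
}
```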

The base-layer activity of a pod is controlled by a scheduler that decides which agent to run at each tick of the system. The base layer is overseen by a secondary system called the cortex, which is scheduled after every agent turn. The cortex's behavior is determined by the set of "sentinels" it is configured with. Each sentinel observes the base layer for a single pattern; when the pattern is detected, it reacts by executing an action that affects the pod's operation (e.g., pausing to clarify the objective, injecting a message into the base layer, updating an artifact, or spawning a sub-pod).
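The tick-and-oversee cycle can be sketched as follows. This assumes a round-robin scheduler and a sentinel that detects its pattern with a plain substring match; in Working Group both decisions would presumably be LLM-backed, and every name here (Sentinel, Action, RoundRobin, tick) is hypothetical.

```rust
// Hypothetical sketch: a scheduler picks one agent per tick, and after every
// agent turn the cortex runs each sentinel over the updated work trace.

#[derive(Debug, Clone, PartialEq)]
enum Action {
    InjectMessage(String),
    PauseForClarification(String),
}

/// A sentinel watches for exactly one pattern and returns an action if found.
trait Sentinel {
    fn observe(&self, trace: &[String]) -> Option<Action>;
}

/// Assumed example sentinel: a substring match stands in for an LLM judgement.
struct GoalQuestionSentinel;

impl Sentinel for GoalQuestionSentinel {
    fn observe(&self, trace: &[String]) -> Option<Action> {
        let last = trace.last()?;
        if last.contains("unclear requirement") {
            Some(Action::PauseForClarification(last.clone()))
        } else {
            None
        }
    }
}

/// Simplest possible scheduler (an assumption): round-robin over agent names.
struct RoundRobin {
    agents: Vec<String>,
    next: usize,
}

impl RoundRobin {
    fn pick(&mut self) -> &str {
        let i = self.next;
        self.next = (self.next + 1) % self.agents.len();
        &self.agents[i]
    }
}

/// One tick: run the scheduled agent, append its output, then run the cortex.
fn tick(
    scheduler: &mut RoundRobin,
    run_agent: impl Fn(&str, &[String]) -> String,
    sentinels: &[Box<dyn Sentinel>],
    trace: &mut Vec<String>,
) -> Vec<Action> {
    let agent = scheduler.pick().to_string();
    let output = run_agent(agent.as_str(), trace.as_slice());
    trace.push(format!("{}: {}", agent, output));
    // Cortex pass: every sentinel sees the trace as it stands after the turn.
    let view = trace.as_slice();
    sentinels.iter().filter_map(|s| s.observe(view)).collect()
}

fn main() {
    let mut scheduler = RoundRobin { agents: vec!["proposer".into(), "critic".into()], next: 0 };
    let sentinels: Vec<Box<dyn Sentinel>> = vec![Box::new(GoalQuestionSentinel)];
    let mut trace: Vec<String> = Vec::new();
    // Stub agent; a real system would make an LLM call here.
    let stub_agent = |name: &str, _trace: &[String]| {
        if name == "critic" {
            "unclear requirement: latency target?".to_string()
        } else {
            "draft v1".to_string()
        }
    };
    for _ in 0..2 {
        let actions = tick(&mut scheduler, &stub_agent, &sentinels, &mut trace);
        println!("{:?}", actions);
    }
}
```

Keeping detection and reaction inside each sentinel means adding a new behavior is a matter of adding one more boxed sentinel, without touching the scheduler.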

The drive to reduce uncertainty (Goal, World, and Solution) comes from the prompts that back the agents and the cortex sentinels; the drive is actualized by mechanisms that allow pods to halt and ask questions of the user or parent pods, or to retrieve external information. Agents are prompted with an understanding of the system in which they operate; their system prompts communicate the pod objective and supply behavioral and perspectival diversity. An agent's primary input at each turn is the work trace so far, and its output (a proposed idea, a critique, the result of a tool call, etc.) is appended to the work trace after the turn. When the pod hits its round limit, or meets its objective (by judging that it is no longer uncertain about how to generate the target artifact), a cortex sentinel uses the work trace to generate the pod's target artifact.
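A sketch of a pod's outer loop under two simplifying assumptions: a round is one turn for each agent, and the completion check and artifact generation are plain functions standing in for LLM calls (the latter playing the role of the artifact-generating sentinel). The names run_pod, StopReason, complete, and generate_artifact are hypothetical.

```rust
// Hypothetical sketch: agent outputs are appended to the work trace; the pod
// stops at its round limit or when the completion check says it is no longer
// uncertain, and the final trace is turned into the target artifact.

#[derive(Debug, PartialEq)]
enum StopReason {
    RoundLimit,
    ObjectiveMet,
}

fn run_pod(
    max_rounds: u32,
    agents: &[&str],
    mut agent_turn: impl FnMut(&str, &[String]) -> String, // stands in for an LLM call
    complete: impl Fn(&[String]) -> bool,                   // stands in for an LLM judgement
    generate_artifact: impl Fn(&[String]) -> String,        // stands in for the artifact sentinel
) -> (String, StopReason) {
    let mut trace: Vec<String> = Vec::new();
    let mut reason = StopReason::RoundLimit;
    // Assumption: one round = one turn per agent, in roster order.
    'rounds: for _round in 0..max_rounds {
        for &agent in agents {
            // Each turn sees the whole trace so far and appends its output.
            let output = agent_turn(agent, trace.as_slice());
            trace.push(format!("{}: {}", agent, output));
            if complete(trace.as_slice()) {
                reason = StopReason::ObjectiveMet;
                break 'rounds;
            }
        }
    }
    (generate_artifact(trace.as_slice()), reason)
}

fn main() {
    let (artifact, reason) = run_pod(
        5,
        &["proposer", "critic"],
        |agent, trace| format!("turn {} by {}", trace.len(), agent),
        |trace| trace.len() >= 3,
        |trace| trace.join("\n"),
    );
    println!("{:?}\n{}", reason, artifact);
}
```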

Work traces may look a bit like a discussion (hence the name 'working group'), but in their ideal form they should resemble a "solution uncertainty reduction narrative". This narrative is essentially a composite chain of thought with contributions from diversely configured LLMs, injected outside and system information, and user input.
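One way to model the composite trace is as a sequence of entries tagged by source, so that agent contributions, injected external or system information, and user input render into a single ordered narrative. The Source variants and the bracketed rendering format are assumptions for illustration only.

```rust
use std::fmt;

// Hypothetical work-trace entry: each contribution records where it came from.
#[derive(Debug, Clone)]
enum Source {
    Agent(String),    // name of a diversely configured agent
    External(String), // assumed: tool that injected outside info, e.g. "web_search"
    System(String),   // assumed: system/cortex notice, e.g. a sentinel name
    User,
}

#[derive(Debug, Clone)]
struct TraceEntry {
    source: Source,
    content: String,
}

impl fmt::Display for TraceEntry {
    fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {
        match &self.source {
            Source::Agent(name) => write!(f, "[{}] {}", name, self.content),
            Source::External(tool) => write!(f, "[tool:{}] {}", tool, self.content),
            Source::System(origin) => write!(f, "[system:{}] {}", origin, self.content),
            Source::User => write!(f, "[user] {}", self.content),
        }
    }
}

/// Render the trace in order as one narrative, one entry per line.
fn render(trace: &[TraceEntry]) -> String {
    trace.iter().map(|e| e.to_string()).collect::<Vec<_>>().join("\n")
}

fn main() {
    let trace = vec![
        TraceEntry { source: Source::Agent("proposer".into()), content: "Use a token bucket.".into() },
        TraceEntry { source: Source::External("web_search".into()), content: "Prior art: leaky bucket.".into() },
        TraceEntry { source: Source::System("goal_check".into()), content: "Objective needs a burst limit.".into() },
        TraceEntry { source: Source::User, content: "Burst tolerance matters.".into() },
    ];
    println!("{}", render(&trace));
}
```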

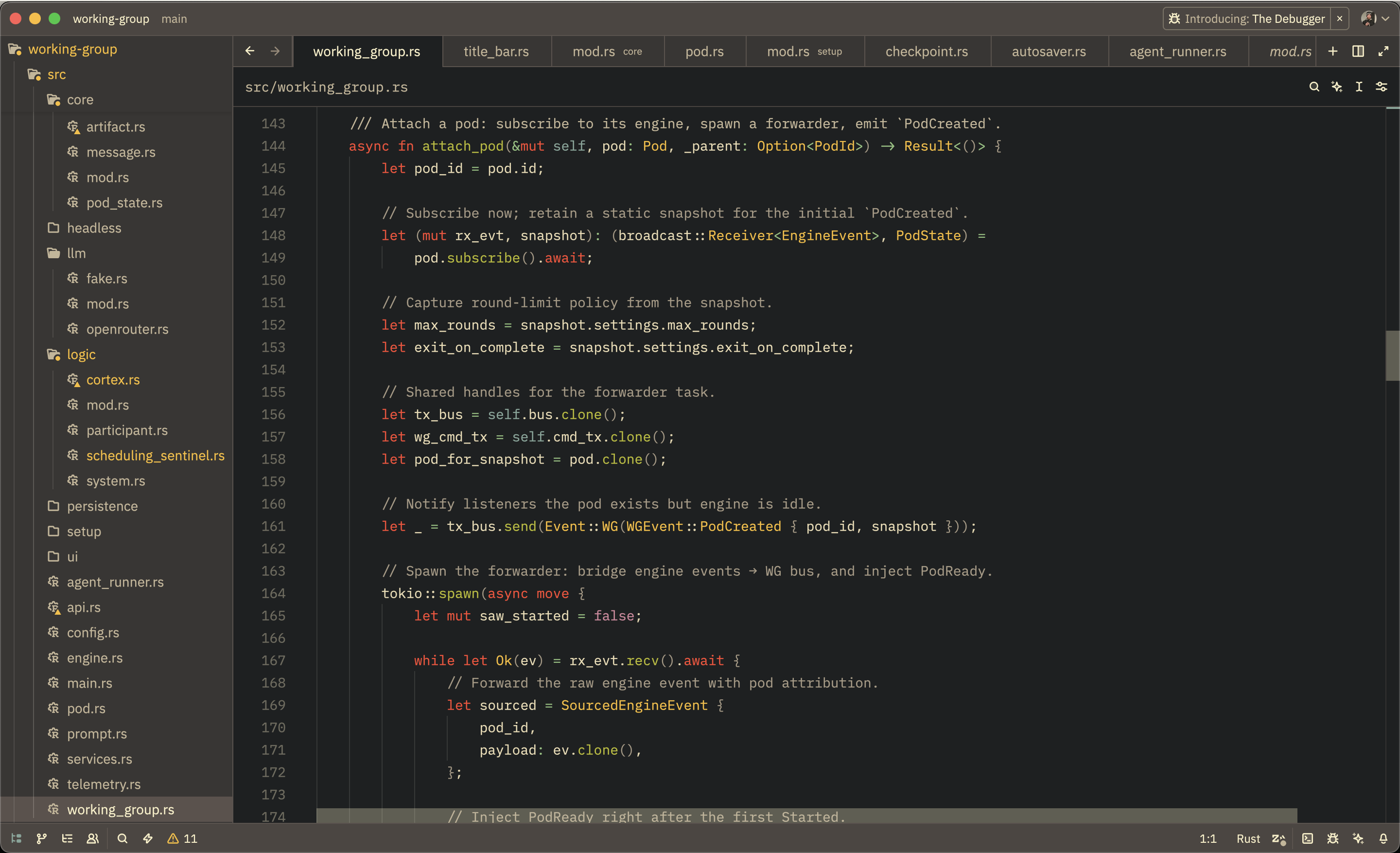

Working Group is being written as a Rust terminal utility. You run it by supplying a root pod config that defines an objective, an agent roster, and a cortex sentinel set. Built-in defaults are provided, so you could, for example, supply only an objective and let it fill in the rest. With the --headless flag, it simply emits a final artifact to stdout upon completion (optionally pausing to ask the user questions along the way). It also comes with a TUI resembling a multi-agent chatroom, where users can manually step through or select agent turns, speak within any pod, cancel pods, etc.
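A hypothetical sketch of how a root config might be resolved from the command line: an objective given as positional words is merged with built-in defaults, and --headless selects stdout output instead of the TUI. Only --headless comes from the text; the default round limit, the agent and sentinel names, and the argument layout are invented for illustration.

```rust
// Hypothetical root-config resolution; defaults below are invented placeholders.

#[derive(Debug, PartialEq)]
struct RootConfig {
    objective: String,
    max_rounds: u32,
    agents: Vec<String>,
    sentinels: Vec<String>,
    headless: bool,
}

impl RootConfig {
    /// Fill everything except the objective from (assumed) built-in defaults.
    fn from_objective(objective: &str) -> Self {
        RootConfig {
            objective: objective.to_string(),
            max_rounds: 8,
            agents: vec!["proposer".into(), "critic".into(), "researcher".into()],
            sentinels: vec!["clarify_goal".into(), "fetch_info".into(), "emit_artifact".into()],
            headless: false,
        }
    }
}

/// Treat every non-flag argument as part of the objective.
fn parse_args(args: &[String]) -> Result<RootConfig, String> {
    let headless = args.iter().any(|a| a == "--headless");
    let objective = args
        .iter()
        .filter(|a| !a.starts_with("--"))
        .cloned()
        .collect::<Vec<_>>()
        .join(" ");
    if objective.is_empty() {
        return Err("usage: <binary> [--headless] <objective>".to_string());
    }
    // Struct update syntax: override `headless`, keep the other defaults.
    Ok(RootConfig { headless, ..RootConfig::from_objective(&objective) })
}

fn main() {
    // Skip argv[0], the program name.
    let args: Vec<String> = std::env::args().skip(1).collect();
    match parse_args(&args) {
        Ok(cfg) if cfg.headless => println!("would run headless: {}", cfg.objective),
        Ok(cfg) => println!("would open the TUI for: {}", cfg.objective),
        Err(usage) => eprintln!("{}", usage),
    }
}
```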

LLMs have limited attention. As input length increases, the attention paid to any part of the input becomes increasingly diffuse, and performance degrades. Likewise, when an LLM is asked to choose among a large number of possible actions, the attention it gives to the implications of each possibility becomes diffuse, with the same result.

Every aspect of the system that requires something like intelligence (judgement, tool use, solution formulation) is backed by an LLM step, in which some context is computed and arranged as the input to an LLM call. The attentional burden of that input must be managed systematically to prevent performance degradation.
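To make "computed and arranged" concrete, here is a sketch of one possible LLM step that keeps only the most recent trace entries fitting a token budget. Both the recency policy and the chars/4 token estimate are assumptions; a real system would use the model's tokenizer and likely a more selective policy.

```rust
// Hypothetical context builder for a single LLM step.

/// Crude token estimate (assumption): about four characters per token, rounded up.
fn estimate_tokens(text: &str) -> usize {
    (text.chars().count() + 3) / 4
}

/// Build the input for one LLM call: a fixed instruction plus as much recent
/// trace as fits in `budget` tokens, kept in chronological order.
fn build_context(instruction: &str, trace: &[String], budget: usize) -> String {
    let mut remaining = budget.saturating_sub(estimate_tokens(instruction));
    let mut kept: Vec<&str> = Vec::new();
    // Walk backwards from the newest entry; stop at the first one that won't fit.
    for entry in trace.iter().rev() {
        let cost = estimate_tokens(entry);
        if cost > remaining {
            break;
        }
        remaining -= cost;
        kept.push(entry.as_str());
    }
    kept.reverse(); // restore chronological order
    let mut prompt = String::from(instruction);
    for entry in kept {
        prompt.push('\n');
        prompt.push_str(entry);
    }
    prompt
}

fn main() {
    let trace: Vec<String> = (1..=5).map(|i| format!("turn {}: some proposal text", i)).collect();
    println!("{}", build_context("You are the critic.", &trace, 20));
}
```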

The core architecture is motivated by this need to systematically distribute the problem context and decision complexity among components, so that each can operate with enough attention to perform at the full capability of its backing LLM.

This segmentation can be seen in the context isolation of individual pods, which solve sub-problems and construct sub-artifacts, and in the division of labor between a pod's base layer and the cortex layer that oversees it and acts on its behalf. The base layer can focus on the meat of the problem itself, while the cortex brings in supplementary information, modulates system parameters, drafts and stores candidate artifacts, and so on. The cortex itself is divided into a number of sentinels, each of which observes the base layer for only a single aspect of the pod's operation.
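Pod-level context isolation can be sketched as follows, assuming a synchronous spawn: the child starts from a fresh trace seeded only with its objective, and the parent records only the returned artifact, never the child's intermediate work. Pod, spawn_subpod, and the solve callback (standing in for the child's base layer and cortex) are hypothetical.

```rust
// Hypothetical sketch of context isolation between a parent pod and a sub-pod.

struct Pod {
    objective: String,
    trace: Vec<String>,
}

impl Pod {
    fn new(objective: &str) -> Self {
        Pod { objective: objective.to_string(), trace: vec![format!("objective: {}", objective)] }
    }

    /// Spawn a child pod synchronously and append only its artifact here.
    /// `solve` stands in for the child's own base layer and cortex.
    fn spawn_subpod(&mut self, objective: &str, solve: impl Fn(&mut Pod) -> String) -> String {
        let mut child = Pod::new(objective);
        let artifact = solve(&mut child);
        // The child's working trace is dropped with `child`; only the result crosses over.
        self.trace.push(format!("sub-pod artifact ({}): {}", objective, artifact));
        artifact
    }
}

fn main() {
    let mut parent = Pod::new("Design a rate limiter");
    parent.trace.push("proposer: we need a survey of algorithms".into());
    let artifact = parent.spawn_subpod("Survey rate-limiting algorithms", |child| {
        child.trace.push("researcher: token bucket, leaky bucket, sliding window".into());
        child.trace.push("critic: sliding window has a higher memory cost".into());
        format!("summary of {} notes for '{}'", child.trace.len() - 1, child.objective)
    });
    println!("{}", artifact);
    for line in &parent.trace {
        println!("{}", line);
    }
}
```

The parent's later LLM steps therefore pay the attentional cost of one artifact line rather than of the child's entire deliberation.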

- Init/Idle/Waiting/Running
- RoundStarted/Ended, max_rounds, exit_on_complete
- AwaitUserInput Command to Engine in response to Goal/World uncertainty
- PASS_TOKEN handling; end-of-turn Command
- ServiceHub
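These notes are terse; what follows is one possible reading of them as a pod lifecycle, not the actual design. The identifiers (Init, Idle, Waiting, Running, RoundStarted, RoundEnded, max_rounds, exit_on_complete, AwaitUserInput, PASS_TOKEN) come from the notes. The transition rules, the literal value of PASS_TOKEN, and its meaning (an agent declining to contribute, so nothing is appended) are assumptions. ServiceHub is left out because the notes give no detail about it.

```rust
// Hypothetical pod lifecycle built from the identifiers in the notes.
// Transition rules and PASS_TOKEN semantics are ASSUMPTIONS.

const PASS_TOKEN: &str = "PASS_TOKEN"; // assumed literal an agent emits to pass

#[derive(Debug, Clone, Copy, PartialEq)]
enum PodState { Init, Idle, Waiting, Running }

#[derive(Debug, Clone, PartialEq)]
enum Event { RoundStarted(u32), RoundEnded(u32) }

/// Command an agent turn ends with (assumed variants).
#[derive(Debug, Clone, PartialEq)]
enum Command {
    Continue,
    AwaitUserInput(String), // question addressing Goal/World uncertainty
    Complete,
}

struct Pod {
    state: PodState,
    round: u32,
    max_rounds: u32,
    exit_on_complete: bool,
    completed: bool,
    trace: Vec<String>,
    events: Vec<Event>,
}

impl Pod {
    fn new(max_rounds: u32, exit_on_complete: bool) -> Self {
        Pod { state: PodState::Init, round: 0, max_rounds, exit_on_complete,
              completed: false, trace: Vec::new(), events: Vec::new() }
    }

    fn is_finished(&self) -> bool {
        self.round >= self.max_rounds || (self.exit_on_complete && self.completed)
    }

    /// Begin the next round, or go Idle and return false if the pod is done.
    fn start_round(&mut self) -> bool {
        if self.is_finished() {
            self.state = PodState::Idle;
            return false;
        }
        self.round += 1;
        self.state = PodState::Running;
        self.events.push(Event::RoundStarted(self.round));
        true
    }

    fn end_round(&mut self) {
        self.events.push(Event::RoundEnded(self.round));
    }

    /// Handle the end-of-turn Command; a PASS_TOKEN output is not recorded.
    fn end_turn(&mut self, agent: &str, output: &str, command: Command) {
        if output.trim() != PASS_TOKEN {
            self.trace.push(format!("{}: {}", agent, output));
        }
        match command {
            Command::Continue => {}
            Command::AwaitUserInput(question) => {
                self.trace.push(format!("question for user: {}", question));
                self.state = PodState::Waiting;
            }
            Command::Complete => self.completed = true,
        }
    }

    /// Resume after the user answers a pending question.
    fn user_reply(&mut self, answer: &str) {
        if self.state == PodState::Waiting {
            self.trace.push(format!("user: {}", answer));
            self.state = PodState::Running;
        }
    }
}

fn main() {
    let mut pod = Pod::new(3, true);
    while pod.start_round() {
        pod.end_turn("proposer", "draft schema", Command::Continue);
        pod.end_turn("critic", PASS_TOKEN, Command::AwaitUserInput("which database?".into()));
        pod.user_reply("Postgres");
        let cmd = if pod.round == 2 { Command::Complete } else { Command::Continue };
        pod.end_turn("proposer", "final schema", cmd);
        pod.end_round();
    }
    println!("{:?} after {} rounds, {} trace entries", pod.state, pod.round, pod.trace.len());
}
```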